Introduction: The Token Economy of LLMs

With the rapid adoption of Large Language Models (LLMs) in real-world applications, developers face a new kind of performance bottleneck: token cost. Unlike traditional APIs that charge per request or data size, LLM APIs charge per token, a unit of text the model processes. Whether you’re building with OpenAI’s GPT, Anthropic’s Claude, or open-source models like LLaMA, reducing token count is critical to controlling costs and scaling efficiently.

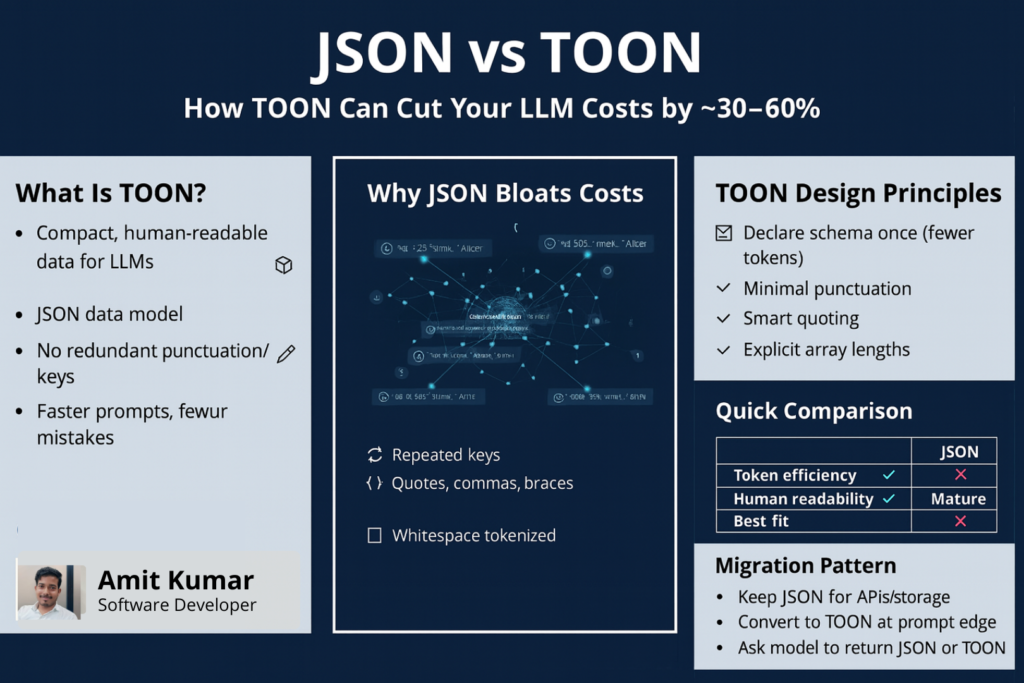

What is TOON (Token-Oriented Object Notation)?

TOON is a data format designed for LLMs. It preserves JSON’s data model—objects, arrays, and primitives—but eliminates redundant structure. Instead of repeating field names for every object, TOON separates schema from values. It’s:

- Human-readable

- Token-efficient

- Schema-first

- Whitespace-structured

Think of it as CSV meets JSON, but optimized for LLM tokenization. TOON is not about replacing JSON in APIs or databases—it’s about reducing bloat at the point where it matters most: the LLM prompt.

Why JSON Is Expensive in the LLM Era

Take a simple example: a JSON array of user records, each with id, name, and role fields.
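The payload below is a hypothetical stand-in for such an example: two user records with `id`, `name`, and `role` fields, with invented values. The sketch serializes them with Python's standard `json` module and counts how often each key is repeated in the output.

```python
import json

# Hypothetical records; the names and roles are invented for illustration.
users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]

payload = json.dumps({"users": users}, indent=2)
print(payload)

# Every record repeats every key, so each quoted key appears once per record.
key_counts = {key: payload.count(f'"{key}"') for key in ("id", "name", "role")}
print(key_counts)
```

With two records each key is written twice; with N records it is written N times.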

This format includes:

- Quotes for every key and string value

- Commas and braces for structure

- Repetition of "id", "name", and "role" on every row

Now multiply this over thousands of records and you’re looking at thousands of wasted tokens.

Why does this matter?

- Tokens = Cost: Hosted models such as GPT-4 Turbo bill by the token.

- Tokens = Latency: Bigger prompts take longer to process.

- Tokens = Limits: You hit model context limits faster.

TOON’s Design Philosophy: Built for Prompts

TOON’s core idea is compact structure without loss. Here’s what that looks like:

1. Schema Declaration

Rather than repeating keys, TOON defines the structure up front—like column headers.

A header such as users[2]{id,name,role}: tells the model that two user objects follow, each with id, name, and role. The rows beneath it carry only values, and quotes appear only when necessary.
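As a rough illustration of the header-plus-rows idea, here is a minimal sketch of an encoder for uniform records. It assumes the tabular layout `name[N]{field,...}:` followed by indented, comma-separated rows; `encode_table` is a hypothetical helper written for this article, not the API of any TOON library, and it skips quoting entirely.

```python
def encode_table(name, rows):
    """Encode a uniform list of dicts as one header line plus one line per row."""
    if not rows:
        raise ValueError("need at least one row to infer the fields")
    fields = list(rows[0].keys())
    # The tabular form only works when every row has exactly the same fields.
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("rows are not uniform")
    field_list = ",".join(fields)
    header = f"{name}[{len(rows)}]{{{field_list}}}:"
    # Assumption: values are simple numbers or words that need no quoting.
    body = ["  " + ",".join(str(row[field]) for field in fields) for row in rows]
    return "\n".join([header] + body)


# Hypothetical sample data.
users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]
print(encode_table("users", users))
```

The field names are written once in the header instead of once per record, which is where most of the savings come from.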

2. Minimal Punctuation

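TOON relies on indentation and line breaks, not per-object braces and brackets, to define structure. This means:

- No {} or [] wrapped around each object or array; brackets and braces appear only once, in the header

- A single delimiter between values, plus indentation and line breaks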

3. Smart Quoting

Strings are only quoted if:

- They contain control characters

- They have leading/trailing whitespace

- They’re ambiguous (e.g., contain tabs or look like numbers)

Because most values need no quotes, this removes two quote characters from nearly every string in the payload.
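The quoting rules above can be sketched as a small predicate. This is an approximation under stated assumptions, not the full rule set of any TOON implementation: it treats a string as ambiguous when it contains the delimiter, looks like a number, or equals `true`, `false`, or `null`, and it reuses JSON-style escaping for the quoted form. `needs_quotes` and `format_string` are hypothetical names.

```python
import json
import re

# Assumption: "looks like a number" means a JSON-style numeric literal.
NUMBER_LIKE = re.compile(r"-?\d+(\.\d+)?([eE][+-]?\d+)?")


def needs_quotes(value: str, delimiter: str = ",") -> bool:
    """Return True when a bare string would be unsafe or ambiguous."""
    if value != value.strip():
        return True  # leading or trailing whitespace
    if any(ord(ch) < 0x20 for ch in value):
        return True  # control characters, including tabs and newlines
    if delimiter in value:
        return True  # would be split into two cells
    if NUMBER_LIKE.fullmatch(value) or value in ("true", "false", "null"):
        return True  # would be read back as a non-string
    return False


def format_string(value: str, delimiter: str = ",") -> str:
    """Emit the string bare when safe, JSON-quoted otherwise (an assumption)."""
    return json.dumps(value) if needs_quotes(value, delimiter) else value


for sample in ["admin", " admin", "42", "Smith, J"]:
    print(repr(sample), "->", format_string(sample))
```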

4. Explicit Array Lengths

TOON headers can include counts, like products[1000]. This helps the model anticipate the input structure and makes truncated or malformed output easier to detect during generation.
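One way to use the declared count as a guardrail is to compare it with the number of rows actually received. The sketch below assumes a single tabular block whose first line starts with `name[N]` and whose remaining non-empty lines are rows; `check_declared_length` is a hypothetical helper.

```python
import re

# Matches the name and explicit count at the start of a header line.
HEADER = re.compile(r"^(?P<name>\w+)\[(?P<count>\d+)\]")


def check_declared_length(block: str) -> bool:
    """Return True when the header's count equals the number of data rows."""
    lines = [line for line in block.splitlines() if line.strip()]
    match = HEADER.match(lines[0]) if lines else None
    if match is None:
        raise ValueError("first line is not a header with an explicit length")
    declared = int(match.group("count"))
    actual = len(lines) - 1  # everything after the header is treated as a row
    return declared == actual


# Hypothetical model outputs: one complete, one cut off after two rows.
complete = "products[3]{sku,qty}:\n  A1,2\n  B2,1\n  C3,5"
truncated = "products[3]{sku,qty}:\n  A1,2\n  B2,1"
print(check_declared_length(complete), check_declared_length(truncated))
```

A mismatch is a cheap signal to retry the request or reject the response before it reaches downstream code.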

Real-World Savings: Benchmarks and Results

Several independent benchmarks have shown that TOON reduces token count by 30–60% for structured data. These savings are especially impactful when:

- Using RAG pipelines that process documents into embeddings

- Feeding tabular data into evaluation/grading models

- Streaming logs, events, or product lists into prompts

Here’s what’s been reported:

- 40% token reduction for user records

- 60% fewer tokens for Q&A banks

- 4–5% increase in output accuracy due to reduced formatting drift

Let’s say your prompt is 10,000 tokens in JSON. With a 30–60% reduction, it may shrink to 4,000–7,000 tokens, cutting inference cost and latency accordingly.
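The arithmetic behind that estimate is simple enough to script. The sketch below applies the 30–60% range quoted above to a 10,000-token prompt; the price per million input tokens is a made-up placeholder, so substitute your provider's actual rate.

```python
def tokens_after(json_tokens: int, reduction_percent: int) -> int:
    """Tokens remaining after cutting the prompt by reduction_percent."""
    return json_tokens * (100 - reduction_percent) // 100


def prompt_cost(tokens: int, price_per_million: float) -> float:
    """Input cost of one prompt at a flat per-token price."""
    return tokens / 1_000_000 * price_per_million


JSON_TOKENS = 10_000
PRICE_PER_MILLION = 3.00  # hypothetical price, not any provider's real rate

for percent in (30, 60):
    remaining = tokens_after(JSON_TOKENS, percent)
    cost = prompt_cost(remaining, PRICE_PER_MILLION)
    print(f"{percent}% reduction: {remaining} tokens, cost {cost:.4f} per prompt")
```

Because input cost scales linearly with token count, the percentage saved on tokens is also the percentage saved on the input side of the bill.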

Migration Strategy: Keep JSON, Adopt TOON at the Edge

TOON isn’t about rewriting your backend. It’s about transforming payloads at the LLM boundary. A typical pattern looks like this:

- Store and expose data in JSON (for APIs, databases, inter-service communication)

- Convert JSON to TOON just before sending the data to an LLM

- Receive output in JSON or TOON as needed

This lets you preserve compatibility while optimizing for token cost.
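A minimal sketch of that boundary step might look like the following. It assumes the upstream service hands over a JSON string, converts it only when the payload is a single named list of flat, uniform records, and otherwise falls back to compact JSON. All function names are hypothetical, and the quoting is deliberately conservative rather than spec-accurate.

```python
import json

PRIMITIVES = (str, int, float, bool, type(None))


def is_uniform_table(value) -> bool:
    """True for a non-empty list of flat dicts that all share the same keys."""
    if not isinstance(value, list) or not value:
        return False
    if not all(isinstance(row, dict) for row in value):
        return False
    fields = list(value[0].keys())
    return all(
        list(row.keys()) == fields
        and all(isinstance(v, PRIMITIVES) for v in row.values())
        for row in value
    )


def cell(value) -> str:
    # Conservative assumption: only plain alphabetic words go out bare;
    # everything else is JSON-encoded, which over-quotes but stays unambiguous.
    if isinstance(value, str) and value.isalpha() and value not in ("true", "false", "null"):
        return value
    return json.dumps(value)


def to_prompt_payload(json_text: str) -> str:
    """Convert at the LLM boundary; leave non-tabular data as compact JSON."""
    data = json.loads(json_text)
    if isinstance(data, dict) and len(data) == 1:
        name, rows = next(iter(data.items()))
        if is_uniform_table(rows):
            fields = list(rows[0].keys())
            field_list = ",".join(fields)
            lines = [f"{name}[{len(rows)}]{{{field_list}}}:"]
            lines += ["  " + ",".join(cell(row[f]) for f in fields) for row in rows]
            return "\n".join(lines)
    return json.dumps(data, separators=(",", ":"))


uniform = '{"users": [{"id": 1, "name": "Alice", "role": "admin"}, {"id": 2, "name": "Bob", "role": "user"}]}'
nested = '{"order": {"id": 7, "items": [{"sku": "A1", "tags": ["x"]}]}}'
print(to_prompt_payload(uniform))
print(to_prompt_payload(nested))
```

Keeping the fallback in the same function means callers never need to know which format was chosen, and upstream systems keep producing plain JSON.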

As someone working with GitLab CI/CD, I’ve automated this conversion in pipelines where prompts are dynamically generated. Using Node.js or Python scripts, I convert batched JSON payloads into TOON before LLM inference, reducing billing by ~35% without touching upstream systems.

TOON vs JSON: Feature Comparison

| Feature | TOON | JSON |
|---|---|---|
| Token Efficiency | High (30–60% fewer tokens) | Low (verbose syntax) |
| Human Readability | Table-like, minimal clutter | Familiar, but punctuation-heavy |
| Ecosystem Support | Emerging (open-source tools) | Mature, universal |
| Best Use Cases | LLM prompts, tabular data | APIs, complex/nested data |
| Schema Clarity | Header-based, compact | Repeated keys per object |

When to Use TOON (and When Not To)

✅ Use TOON When:

- You’re working with uniform datasets (users, products, logs)

- You want guardrails (e.g., array counts) to reduce hallucinations

- You’re optimizing cost-sensitive pipelines

❌ Avoid TOON When:

- Data is deeply nested or irregular

- You need broad compatibility across tools

- You’re building public-facing APIs

Real-World Use Cases

In my projects involving full-stack development and DevOps automation, TOON shines in:

- RAG Pipelines: When querying vector databases, passing TOON-formatted contexts lets you include more information in fewer tokens.

- LLM-based Grading Systems: Reducing token size lets you evaluate more responses per batch.

- Event Streams: If you’re sending logs or sensor data to models, TOON compresses the format without losing semantics.

For example, in a Kubernetes monitoring tool I worked on, logs were parsed and passed to an LLM for anomaly detection. Switching from JSON to TOON reduced token usage by 52%, cutting monthly inference costs by over ₹20,000.

Common Questions

Is TOON lossy?

No. TOON is a lossless format; you can always convert it back to JSON.
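For the simple tabular case, the round trip can be sketched with a small decoder. It assumes a header of the form `name[N]{field,...}:`, comma-separated rows, and no quoted values that themselves contain commas; `decode_table` and `parse_scalar` are hypothetical helpers, not part of any TOON library.

```python
import json
import re

HEADER = re.compile(r"^(\w+)\[(\d+)\]\{([^}]*)\}:$")


def parse_scalar(text: str):
    """Read numbers, true/false/null, and quoted strings as JSON; else a bare string."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text


def decode_table(block: str) -> dict:
    """Rebuild the JSON-style structure from one tabular block."""
    lines = [line.strip() for line in block.splitlines() if line.strip()]
    match = HEADER.match(lines[0]) if lines else None
    if match is None:
        raise ValueError("expected a header like name[N]{field,...}:")
    name, _count, field_list = match.groups()
    fields = field_list.split(",")
    rows = []
    for line in lines[1:]:
        # Limitation: a plain split breaks on quoted values containing commas.
        values = [parse_scalar(part) for part in line.split(",")]
        rows.append(dict(zip(fields, values)))
    return {name: rows}


block = "users[2]{id,name,role}:\n  1,Alice,admin\n  2,Bob,user"
print(json.dumps(decode_table(block)))
```

The numeric `id` values come back as integers and the names as strings, so the decoded structure matches what a JSON parser would have produced for the equivalent payload.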

Is TOON widely supported?

Tooling is growing. Open-source TOON parsers exist for Python and JavaScript, along with a CLI. You can also build lightweight converters.

Does TOON always save 60%?

No. It depends on structure. Uniform datasets see the biggest gains; irregular ones, less so.

Can TOON be generated from JSON automatically?

Yes. You can use schema detection and transformation scripts (in Node.js, Python, or Go) to convert existing JSON into TOON.

Developer Tips: Integrating TOON in Your Stack

Here’s how I’ve incorporated TOON in my DevOps and backend pipelines:

- In Node.js middleware, I detect tabular payloads and convert them to TOON before hitting the model endpoint.

- In GitLab CI/CD, I use a TOON CLI tool to reformat logs before passing them into prompt-based monitoring tools.

- On AWS Lambda, I batch transform JSON into TOON for prompt efficiency.

These small changes delivered major cost savings without altering any core business logic.

Conclusion: Embrace the Token Frontier

The rise of LLMs like GPT forces us to rethink how we format data. JSON, while simple and powerful, is not cheap in a tokenized world. TOON offers a smarter, leaner alternative: it cuts token costs, speeds up inference, and can improve output quality.

For developers and engineers managing both performance and budget, TOON isn’t just a data format. It’s a strategic optimization. Adopt it where it matters, right before the model call, and unlock efficient, scalable AI pipelines.