Exploring LLaMA 3.2: Next-Generation AI

Welcome to this guide on LLaMA 3.2! This model family combines text and vision capabilities with lightweight options for mobile devices. Let's dive into what makes it different from LLaMA 3.1 and how to call it from your own machine through the Hugging Face cloud API. This guide is written in simple English, making it a good fit for beginners in India who want to explore AI!

January 8, 2025

Harpal Singh | Ninja

rajanbabrah056@gmail.com

AI Researcher | Tech & Dev | M.Tech Candidate

I am currently pursuing my Master of Technology (M.Tech) with a focus on Artificial Intelligence and its applications in innovative technologies. Over the course of my academic and professional journey, I have completed four internships that have equipped me with a diverse set of skills in both Full Stack and .NET development.


What Makes LLaMA 3.2 Different from LLaMA 3.1?

LLaMA 3.1 was a powerful text-based AI model, but LLaMA 3.2 takes a giant leap forward with these game-changing features:

- Vision Models

  - New Additions: LLaMA 3.2 introduces vision-enabled models with 11B and 90B parameters.

  - Multimodal Capabilities: These models understand both text and images, making them well suited for tasks like:

    - Image captioning: Generate captions for photos or diagrams.

    - Visual question answering: Answer questions about charts, graphs, or maps.

    - Document understanding: Summarize visual data like sales trends or geographical maps.

- Lightweight Models for Edge Devices

  - 1B and 3B Parameters: Small, efficient models designed to run on mobile and edge devices.

  - Benefits:

    - Faster Response: Works locally, avoiding delays caused by the internet.

    - Enhanced Privacy: No data is sent to the cloud.

- LLaMA Stack

  - Simplifies deployment with standardized APIs for on-prem, cloud, or mobile environments.

  - Makes it easy to fine-tune and deploy LLaMA models with pre-built solutions.

- Extended Context Length

  - Supports up to 128K tokens, making it ideal for handling longer documents or conversations.
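To get a feel for what 128K tokens means in practice, here is a rough sketch that estimates whether a piece of text fits in the context window. It assumes about 4 characters per token, which is only a common rule of thumb for English text and not the model's real tokenizer, and it rounds 128K down to 128,000. The function names are made up for this example.

```javascript
// Rough check of whether a prompt fits in a 128K-token context window.
// ASSUMPTION: ~4 characters per token, a rule of thumb for English text;
// the real count depends on the model's tokenizer.
const CONTEXT_WINDOW_TOKENS = 128_000; // "128K", rounded down to be safe
const CHARS_PER_TOKEN = 4; // heuristic, not the Llama tokenizer

// Estimate how many tokens a string would use.
function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Return true if the text plus the space reserved for the reply fits.
function fitsInContext(text, reservedForOutput = 1_000) {
  return estimateTokens(text) + reservedForOutput <= CONTEXT_WINDOW_TOKENS;
}

const shortNote = "Summarize my class notes on photosynthesis.";
console.log(estimateTokens(shortNote), fitsInContext(shortNote));
```

For an exact count you would need to run the model's own tokenizer, so treat this only as a quick pre-check before sending a very long document.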

Why Choose LLaMA 3.2?

- For Students: Simplify class notes, answer questions, and even write essays.

- For Developers: Create intelligent apps that process both text and images.

- For Businesses: Automate data analysis, document processing, and more.
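For developers, a request that combines text and an image might be shaped like the sketch below. It assumes the endpoint accepts OpenAI-style chat messages with "text" and "image_url" content parts, a convention many chat-completion APIs follow; check your provider's documentation before relying on it. The function name, the question, and the image URL are placeholders.

```javascript
// Build a chat message that pairs a question with an image.
// ASSUMPTION: the endpoint accepts "text" and "image_url" content parts,
// as many OpenAI-compatible chat APIs do.
function buildVisionMessages(question, imageUrl) {
  return [
    {
      role: "user",
      content: [
        { type: "text", text: question },
        { type: "image_url", image_url: { url: imageUrl } },
      ],
    },
  ];
}

// Hypothetical example values.
const messages = buildVisionMessages(
  "What trend does this sales chart show?",
  "https://example.com/sales-chart.png"
);
console.log(JSON.stringify(messages, null, 2));
```

Only the 11B and 90B vision models accept images; the 1B and 3B models are text-only.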

How to Access LLaMA 3.2

LLaMA 3.2 can be accessed in several ways, depending on your needs. But don't worry: we'll focus on the easiest and most efficient way, using the Hugging Face cloud API.

Ways to Access LLaMA 3.2:

| Access Method | Description | Ease of Use | Pricing |
|---|---|---|---|
| Cloud API (Hugging Face) | The simplest way to access LLaMA 3.2 via Hugging Face's hosted models and API. No setup required! | Very Easy | Free (Limited), $9.99/month (Pro), $99/month (Team) |
| Download & Host Locally | Download the LLaMA 3.2 model and run it on your own computer/server. Requires more setup. | Harder (Requires technical knowledge) | Free, but requires powerful hardware |
| Custom SDK Integration | Use Hugging Face's SDK to integrate LLaMA 3.2 directly into your app. Requires some programming knowledge. | Medium | Depends on usage |

Pricing Breakdown

Let's take a look at the different pricing options offered by Hugging Face for cloud-based access to LLaMA 3.2. This will help you choose the plan that fits your needs:

| Pricing Plan | Access Type | Features | Price |
|---|---|---|---|
| Free Plan | Cloud API | 1,000 requests per month, limited model access. | Free |
| Pro Plan | Cloud API | 50,000 requests per month, priority support, advanced features. | $9.99/month |
| Team Plan | Cloud API | Unlimited requests, collaboration tools, model management for teams. | $99/month per user |
| Downloadable Model | Local Deployment | Free to download, requires running on your own hardware. | Free (with hardware costs) |

- Easy Setup: No need to worry about downloading heavy models or managing servers. Hugging Face takes care of it all.

- Scalable: You can start with the free plan and upgrade later if your needs grow.

- Cost-Effective: The cloud API lets you pay only for what you use, making it affordable for small projects.

- No Hardware Requirements: You don't need powerful servers or GPUs to get started.

Step-by-Step Guide: Using LLaMA 3.2 on Hugging Face

Now, let's dive into the practical steps of using the LLaMA 3.2 model with Hugging Face's cloud API. We'll go through everything clearly, so even if you're a beginner, you'll feel confident using LLaMA 3.2 in no time.

Step 1: Register for Hugging Face - "Getting Your AI Passport"

Before you can explore and use Hugging Face's powerful models, you'll need to create an account. This will grant you access to the platform's tools and resources, setting the foundation for your AI journey.

Instructions for Registration:

- Create an Account:

  - Visit the Hugging Face website and select the "Sign Up" option located in the upper right corner.

  - Fill in the required details (email, username, password) to create your account.

- Complete Account Setup:

  - Verify your email address if needed and log into your account once it's activated.

- Explore the Platform:

  - Once logged in, you'll have access to Hugging Face's wide variety of models, datasets, and tools.

Step 2: Request Access to the LLaMA 3.2 Model - "Unlocking the Gateway"

Now that you've created your Hugging Face account, it's time to request access to the LLaMA 3.2 models. Here's how to submit your request for access:

- Locate the "Expand to review" Button:

  - On the model page (e.g., LLaMA 3.2 Text Model or LLaMA 3.2 Vision Model), scroll down to find the "Expand to review" section.

  - Click Expand to review to open the form, then review the terms.

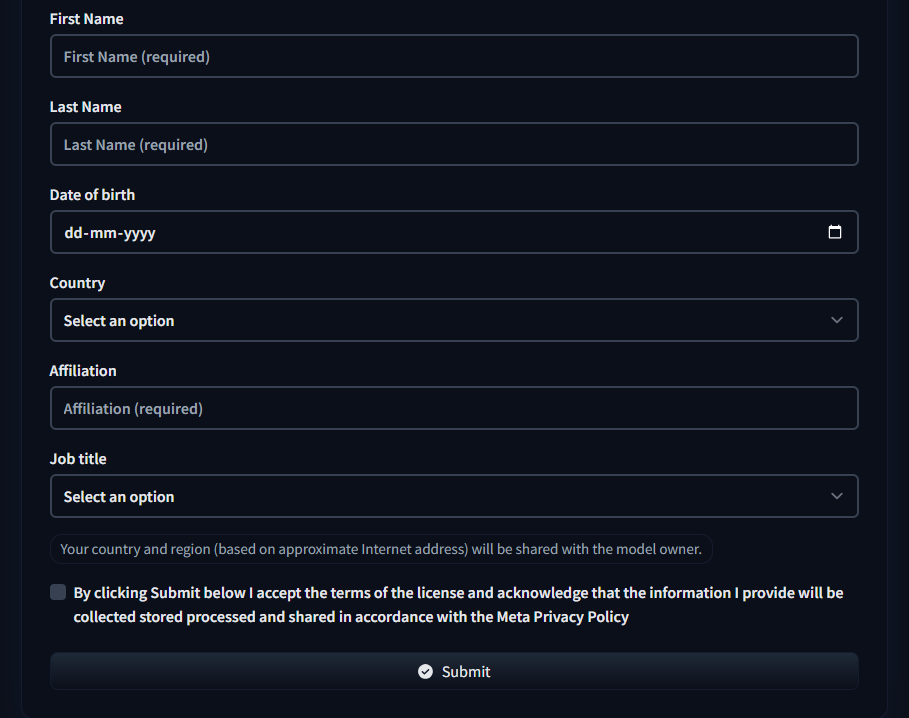

- Complete the Request Form:

  A form will appear where you need to fill out the following details:

  - First Name

  - Last Name

  - Date of Birth (in dd-mm-yyyy format)

  - Country (select your country from the dropdown list)

  - Affiliation (select your organization or entity)

  - Job Title (choose your job title)

  - Consent to License Terms (tick the box to accept the terms of the license)

  After filling out the form, click the "Submit" button to send your request.

- Confirmation of Submission: After submitting the form, you'll see a message confirming that your request has been sent for review.

You can check the status of your access request in your settings page.

Step 3: Generate and Store Your Hugging Face API Key - "Securing Your AI Key"

After gaining access to the models, you'll need an API key (Hugging Face calls it an access token) to authenticate your requests. Think of it as a "password" that allows you to communicate securely with the models.

Instructions for Generating and Storing the API Key:

- Generate Your API Key:

  - Go to your Hugging Face account settings by clicking on your profile icon.

  - Under Settings, click on Access Tokens.

  - Click Create New Token and give it a name (e.g., "LLaMA 3.2 Token").

  - Once the token is generated, copy it and keep it private. You'll need this in the next steps for secure access to the models.
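Before using the token, it can help to check that it was copied correctly. The sketch below assumes that Hugging Face user access tokens start with "hf_"; it only catches copy-paste mistakes and does not prove the token is valid. The environment variable name HF_API_KEY and the function name are hypothetical choices for this example.

```javascript
// Sanity-check a Hugging Face access token before using it.
// ASSUMPTION: user access tokens begin with "hf_". Passing this check does
// not mean the token is valid, only that it has the expected shape.
function looksLikeHfToken(token) {
  return typeof token === "string" && /^hf_[A-Za-z0-9]+$/.test(token.trim());
}

// HF_API_KEY is a hypothetical variable name chosen for this sketch.
const token = process.env.HF_API_KEY;
if (!looksLikeHfToken(token)) {
  console.warn("HF_API_KEY is missing or does not look like a Hugging Face token.");
}
```

Never paste the real token into source code or share it in screenshots; anyone who has it can make requests as you.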

Beginner-Friendly Guide: Creating a Simple AI Text Generator API with Node.js using Hugging Face Inference API

In this guide, we'll build a simple API that can generate text using the LLaMA 3.2 model from Hugging Face. We'll do this step-by-step, and even if you're new to programming, we'll guide you through it!

What You'll Need:

- Node.js installed on your computer (if you don't have it yet, you can download it from nodejs.org).

- A Hugging Face account to access the LLaMA model and get an API key.

- Postman or a comparable tool to test your API requests.

Step 1: Getting Started with Your Node.js Project

First, let's create a fresh Node.js project. This step prepares your environment so you can start using the Hugging Face models.

- Start by Creating a New Folder for Your Project: Open your terminal or command prompt and create a new folder where your project will live. You can call it huggingface-api or whatever you like.

- Initialize the Project: Inside that folder, run `npm init -y` to set up a new Node.js project. It creates a package.json file where your project's details and dependencies are stored.

- Install the Required Libraries: Run `npm install express @huggingface/inference dotenv body-parser`. We'll use the Express framework to create our API and @huggingface/inference to interact with the Hugging Face models:

  - express: For setting up the web server.

  - @huggingface/inference: To interact with Hugging Face's API.

  - dotenv: For safely storing your Hugging Face API key in a .env file.

  - body-parser: To handle incoming JSON requests.

Step 2: Set Up the Environment Variables

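For security, we'll store the API key in a .env file instead of writing it directly in the code.

- Create a .env File: In your project folder, create a file named .env and add one line that assigns your Hugging Face API key to an environment variable.

- Update Your Code to Use This Key: In your code, we'll use the dotenv library to load the key from the .env file.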
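To show what loading a .env file involves, here is a simplified sketch of the idea behind dotenv: it reads KEY=value lines and turns them into settings. This is not the library's real implementation, which also handles quotes, multiline values, and more. The variable name HF_API_KEY, the placeholder token, and the function parseEnv are assumptions made for this example.

```javascript
// Simplified sketch of what dotenv does: turn KEY=value lines into an object.
// The real library handles quoting, multiline values and other edge cases.
function parseEnv(contents) {
  const result = {};
  for (const line of contents.split(/\r?\n/)) {
    const trimmed = line.trim();
    if (trimmed === "" || trimmed.startsWith("#")) continue; // skip blanks and comments
    const eq = trimmed.indexOf("=");
    if (eq === -1) continue; // not a KEY=value line
    result[trimmed.slice(0, eq).trim()] = trimmed.slice(eq + 1).trim();
  }
  return result;
}

// Hypothetical .env contents: HF_API_KEY and its value are placeholders.
const exampleEnv = "# secrets\nHF_API_KEY=hf_your_token_here\nPORT=5000";
const config = parseEnv(exampleEnv);
console.log(Object.keys(config));
```

In the real project you would not write this parser yourself. Calling `require("dotenv").config()` at the top of your code makes the values available on `process.env`, for example `process.env.HF_API_KEY`. Add .env to your .gitignore file so the key is never committed.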

Step 3: Write the Code for the API

Let's set up the Express server and code the API that will interact with Hugging Face's model.

- Create a file called app.js in your project folder.

- Add the following code to app.js:
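A minimal sketch of what app.js might contain is shown below. Several details are assumptions and not confirmed by this guide: the model ID, the environment variable name HF_API_KEY, the "prompt" request field, the "generated_text" response field, and the use of the client's chatCompletion method. Depending on the installed version of @huggingface/inference, the client class may be exported as InferenceClient instead of HfInference.

```javascript
// Sketch of app.js: an Express route that forwards a prompt to the
// Hugging Face Inference API. Items marked ASSUMPTION are illustrative.

// ASSUMPTION: use the model repository you were granted access to.
const MODEL_ID = "meta-llama/Llama-3.2-3B-Instruct";

// Build the /generate handler around any client that exposes
// chatCompletion(), so the logic can be tested without network access.
function makeGenerateHandler(client, model = MODEL_ID) {
  return async function generate(req, res) {
    const prompt = req.body && req.body.prompt; // ASSUMPTION: field name "prompt"
    if (typeof prompt !== "string" || prompt.trim() === "") {
      return res
        .status(400)
        .json({ error: "Request body must include a non-empty 'prompt' string." });
    }
    try {
      const completion = await client.chatCompletion({
        model,
        messages: [{ role: "user", content: prompt }],
        max_tokens: 200,
      });
      // ASSUMPTION: response field name "generated_text".
      return res.json({ generated_text: completion.choices[0].message.content });
    } catch (err) {
      console.error("Inference request failed:", err.message);
      return res.status(502).json({ error: "Text generation failed." });
    }
  };
}

// Wire the handler into an Express server. The packages are loaded here so
// the handler above can be used even where they are not installed.
function startServer() {
  require("dotenv").config(); // loads .env into process.env
  const express = require("express");
  const bodyParser = require("body-parser");
  const { HfInference } = require("@huggingface/inference");

  const hf = new HfInference(process.env.HF_API_KEY); // ASSUMPTION: variable name
  const app = express();
  app.use(bodyParser.json()); // parse incoming JSON request bodies
  app.post("/generate", makeGenerateHandler(hf));

  const port = process.env.PORT || 5000;
  app.listen(port, () => console.log(`Server listening on port ${port}`));
}

// Uncomment the next line when saving this sketch as app.js:
// startServer();
```

Keeping the handler separate from the server setup is a design choice for this sketch: it lets you test the request logic with a fake client before spending any API requests.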

Step 4: Run the Server

Now you're ready to start the server. In your terminal, run `node app.js` from your project folder.

The server will start on port 5000 (or the port specified in your .env file). It will listen for incoming POST requests on the /generate endpoint.

Step 5: Test the API

To test the API, you can use Postman or cURL to send a POST request.

- Postman:

  - Set the request type to POST.

  - Set the URL to http://localhost:5000/generate.

  - In the Body tab, choose raw and JSON format, then add this JSON:

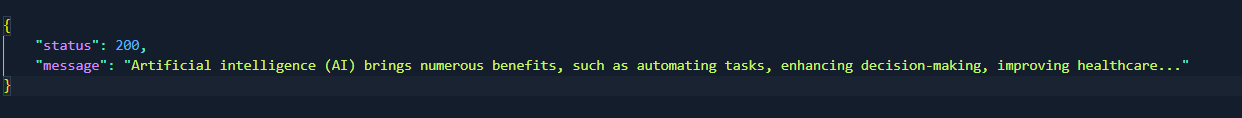
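If you prefer to test from code instead of Postman, the same request can be sketched in Node.js. This example assumes the endpoint expects a JSON body with a "prompt" field, which is a hypothetical name for this sketch, and it uses the fetch function built into Node.js 18 and later. The function names are also made up for illustration.

```javascript
// Build the same POST request that Postman would send.
// ASSUMPTION: the endpoint expects a JSON body with a "prompt" field.
function buildGenerateRequest(prompt) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ prompt }),
  };
}

// Send the request with the fetch() built into Node.js 18+.
async function callGenerate(prompt, baseUrl = "http://localhost:5000") {
  const response = await fetch(`${baseUrl}/generate`, buildGenerateRequest(prompt));
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}

// Example (needs the server from Step 4 to be running):
// callGenerate("Write a short poem about the monsoon.").then(console.log);
```

The example call is commented out because it only works while your server is running on port 5000.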

Expected Response:

If everything is working correctly, you should receive a response like this:
